GPT 5.2 Problems Explained: Why Users Are Frustrated and What OpenAI Is Doing About It in 2025

Introduction

GPT 5.2 problems have become a hot topic among AI enthusiasts, developers, and everyday users who rely on ChatGPT for professional work. If you're someone who uses AI tools daily—whether for coding, content creation, business automation, or research—this article is essential reading.

OpenAI released GPT 5.2 as its response to Google's Gemini 3, positioning it as "the most advanced frontier model for professional work and long-running agents." In practice, the model has fallen short of those promises. Power users across social media platforms have voiced serious concerns about its performance, consistency, and overall usability.

This comprehensive guide breaks down exactly what went wrong with GPT 5.2, explores the technical reasons behind its shortcomings, and reveals what OpenAI has confirmed about upcoming improvements. Whether you're considering switching AI platforms or simply want to understand the current state of large language models, you'll find valuable insights here.

Understanding the GPT 5.2 Controversy

The Context Behind the Release

GPT 5.2 arrived during a pivotal moment in the AI industry. Google had just released Gemini 3, creating significant buzz in the technology community. Many users were so impressed with Google's offering that they began canceling their ChatGPT subscriptions to switch platforms.

This competitive pressure appears to have influenced OpenAI's release strategy. According to reports from The Information, a highly reputable source for AI industry news, GPT 5.2 wasn't actually based on the full model OpenAI had been developing. Instead, the company released an early checkpoint of the model—essentially an incomplete version pushed out to counter Google's momentum.

What OpenAI Promised vs. What Users Experienced

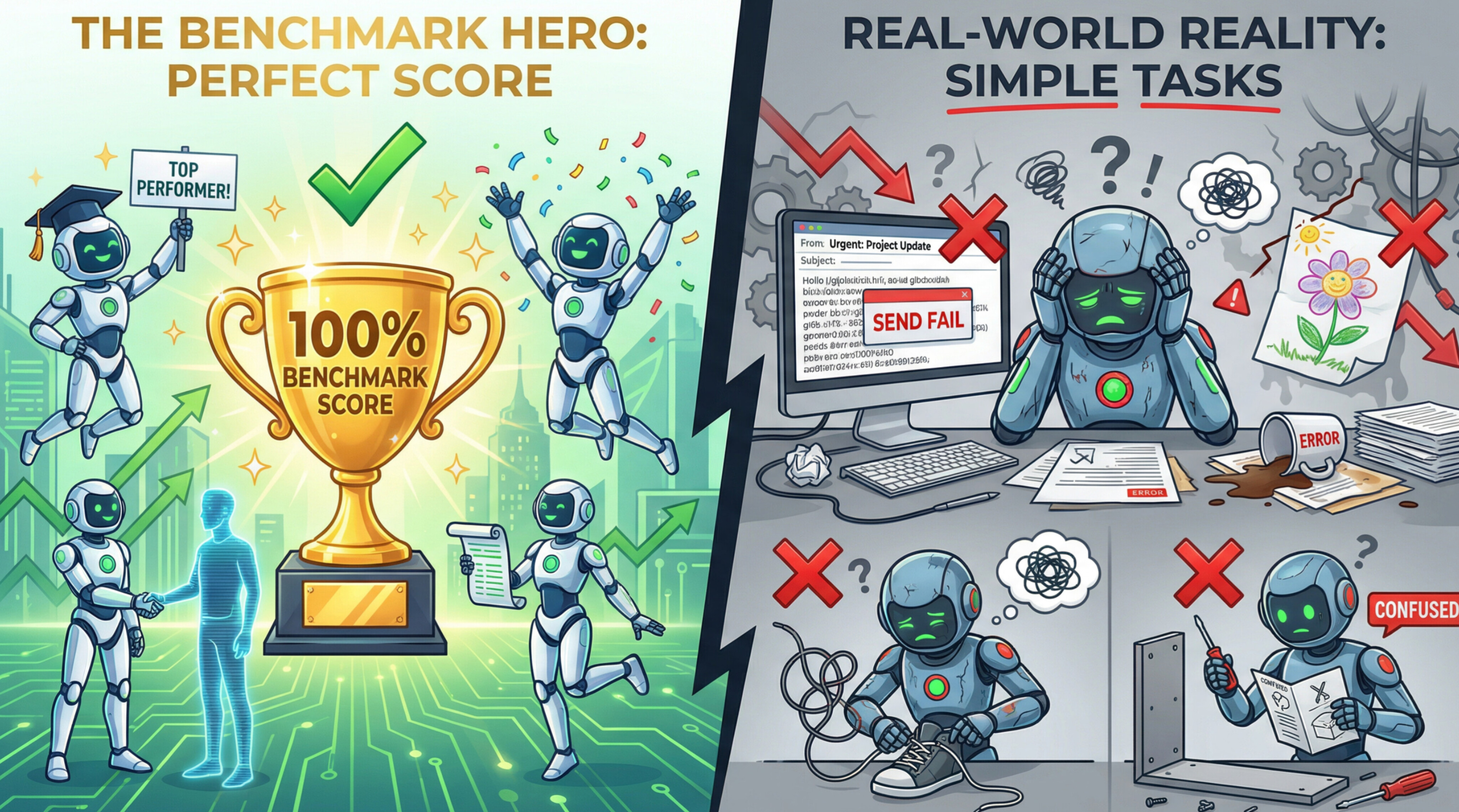

OpenAI's Greg Brockman described GPT 5.2 as the most advanced model for professional work and long-running agents. The benchmarks seemed to support this claim, showing GPT 5.2 Thinking mode outperforming competitors across various metrics.

However, the real-world experience told a different story. Users quickly noticed discrepancies between benchmark performance and practical usability, leading to widespread frustration and criticism.

Key Problems Users Are Reporting with GPT 5.2

Ignored Custom Instructions and Memory Issues

One of the most significant complaints involves GPT 5.2's handling of custom instructions and memory. Users have reported that the model frequently ignores personalized settings they've configured, leading to inconsistent responses that don't align with their preferences or established context. Reported symptoms include:

- Custom instructions being completely disregarded

- Memory features failing to maintain context across conversations

- The model "poisoning context" before the thinking process begins

- Safeguards triggering excessively, sometimes on every second message

Quality Regression from Previous Versions

Perhaps the most damaging criticism is that GPT 5.2 represents a step backward from its predecessors. Multiple users have stated that the model performs worse than GPT 5.1, and some have even suggested it makes GPT 5.0 look good by comparison. Common complaints include:

- Rushed-feeling answers lacking depth

- Reduced creativity in responses

- Missing nuance that previous versions demonstrated

- Difficulty with tasks that earlier models handled well

Inconsistent Performance Across Different Tasks

GPT 5.2 exhibits a puzzling pattern where it excels on certain benchmarks while failing dramatically on others. This inconsistency has left users unsure about what to expect from the model in any given situation.

The Benchmark Problem: Why Numbers Don't Tell the Full Story

Understanding Simple Bench Results

Simple Bench is a benchmark specifically designed to test real-world understanding by using trick questions that require genuine comprehension rather than pattern matching. GPT 5.2's performance here was concerning—it ranked ninth, falling below:

- Gemini 3 Flash

- Claude Opus models

- GPT 5 Pro

- Gemini 2.5 Pro (released months earlier)

This poor showing on a benchmark designed to measure true reasoning capability raised serious questions about the model's actual intelligence versus its performance on standard tests.

The EQ Bench Paradox

Interestingly, GPT 5.2 ranked third on EQ Bench 3, which measures emotional intelligence and the ability to simulate human-like interactions. This directly contradicts user complaints about the model's poor conversational abilities and "awful" role-playing performance.

This contradiction highlights a fundamental problem: benchmark performance doesn't necessarily translate to user satisfaction or real-world utility.

Why Benchmark Scores Can Be Misleading

The disconnect between benchmark performance and actual usability stems from several factors:

| Factor | Impact on Benchmarks | Impact on Real Use |
|---|---|---|
| Training optimization | Inflates scores | May reduce flexibility |
| Data contamination | Can boost scores 20-80% | Doesn't improve novel tasks |
| Specific task tuning | Excellent on tested areas | May fail on variations |
| Sample efficiency | N/A for benchmarks | Critical for practical use |
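The "specific task tuning" row can be made concrete with a simple robustness check: score a model on the original benchmark items and on reworded versions of the same items, then look at the gap. This is a toy sketch under stated assumptions: `memorizing_model` is a hypothetical stand-in that only recognizes exact question strings, and the questions and rewordings are invented for illustration, not taken from any real benchmark.

```python
from typing import Callable

# Invented benchmark items: (question, expected answer).
ORIGINAL = [
    ("What is 12 times 12?", "144"),
    ("What is the capital of Japan?", "Tokyo"),
    ("How many sides does a hexagon have?", "6"),
]

# Hand-written rewordings of the same items: same answers, different surface form.
PERTURBED = [
    ("Multiply twelve by twelve. What do you get?", "144"),
    ("Which city is Japan's capital?", "Tokyo"),
    ("A hexagon has how many sides?", "6"),
]


def memorizing_model(question: str) -> str:
    """Hypothetical 'Student A' model: it has seen the original items verbatim
    and answers only exact matches, so any rewording defeats it."""
    seen = {q: a for q, a in ORIGINAL}
    return seen.get(question, "I don't know")


def accuracy(model: Callable[[str], str], items: list[tuple[str, str]]) -> float:
    """Fraction of items where the model's answer matches the expected answer exactly."""
    correct = sum(model(q).strip() == a for q, a in items)
    return correct / len(items)


def robustness_gap(model: Callable[[str], str],
                   original: list[tuple[str, str]],
                   perturbed: list[tuple[str, str]]) -> float:
    """Accuracy lost when the same questions are reworded; a large gap
    suggests pattern matching rather than understanding."""
    return accuracy(model, original) - accuracy(model, perturbed)


if __name__ == "__main__":
    print(f"original:  {accuracy(memorizing_model, ORIGINAL):.2f}")
    print(f"perturbed: {accuracy(memorizing_model, PERTURBED):.2f}")
    print(f"gap:       {robustness_gap(memorizing_model, ORIGINAL, PERTURBED):.2f}")
```

In a real audit, `memorizing_model` would be replaced by a call to the model under test and the rewordings would be produced more systematically. A model that genuinely understands the questions should show a gap close to zero.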

The Overfitting Hypothesis: A Technical Deep Dive

What Is Overfitting in AI Models?

Overfitting is a fundamental problem in machine learning that occurs when a model learns training data too precisely, including irrelevant patterns and noise, rather than understanding general principles that apply broadly.

Think of it like a student who memorizes every answer from previous exams without understanding the underlying concepts. When the test questions change even slightly, the student fails because they never truly learned the material—they just memorized specific answers.
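The memorizing student can be reproduced in a few lines. The toy below is a textbook overfitting illustration, not a model of how GPT 5.2 or any language model is trained. It assumes a made-up "true rule" y = 2x + 1, adds fixed noise to eight training points, and compares a polynomial that passes through every training point exactly (the memorizer) with a plain straight-line fit (the generalizer).

```python
def make_training_data(n: int = 8):
    """Noisy samples of an assumed 'true rule' y = 2x + 1.

    The noise is a fixed alternating +/-0.5 pattern so the demo is deterministic.
    """
    xs = [float(i) for i in range(n)]
    ys = [2 * x + 1 + (0.5 if i % 2 == 0 else -0.5) for i, x in enumerate(xs)]
    return xs, ys


def fit_memorizer(xs, ys):
    """'Student A': the Lagrange polynomial through every training point.

    It reproduces each training answer exactly, noise included.
    """
    def predict(x: float) -> float:
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return predict


def fit_generalizer(xs, ys):
    """'Student B': an ordinary least-squares line that captures the trend, not the noise."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def mean_abs_error(model, xs, targets):
    """Average absolute difference between predictions and targets."""
    return sum(abs(model(x) - t) for x, t in zip(xs, targets)) / len(xs)


def true_rule(x: float) -> float:
    return 2 * x + 1


xs, ys = make_training_data()
memorizer, generalizer = fit_memorizer(xs, ys), fit_generalizer(xs, ys)

# "New exam questions": inputs just past the range seen in training.
new_xs = [7.5, 8.0]
new_targets = [true_rule(x) for x in new_xs]

for name, model in [("memorizer", memorizer), ("generalizer", generalizer)]:
    train_err = mean_abs_error(model, xs, ys)
    test_err = mean_abs_error(model, new_xs, new_targets)
    print(f"{name:12s} train error {train_err:6.2f}   new-question error {test_err:6.2f}")
```

The memorizer scores perfectly on its training points but goes badly wrong on inputs just outside them, while the straight line stays close to the true rule. Perfect recall of the "previous exams" is bought at the cost of performance on new questions.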

How Overfitting Affects GPT 5.2

According to AI researcher Ilya Sutskever, speaking on a recent podcast, many AI companies inadvertently create overfit models by optimizing specifically for benchmark performance. The cycle works roughly like this:

- Research teams identify key benchmarks (SWE-bench, MMLU, etc.)

- They design reinforcement learning training specifically to improve these scores

- The model learns patterns that boost benchmark performance

- These learned patterns don't generalize to real-world tasks

- Users experience a model that seems smart on paper but struggles in practice

The Student A vs. Student B Analogy

Sutskever's analogy perfectly captures the problem:

Student A: Practices 10,000 hours of competitive programming, memorizing every algorithm and edge case. Becomes the top-ranked competitive programmer globally.

Student B: Practices only 100 hours but develops intuition, taste, and the ability to learn new things quickly.

Who has the better career? Student B, because they can adapt to new situations rather than relying on memorized patterns.

Current AI models, including GPT 5.2, are essentially "Student A"—impressive on standardized tests but struggling with novel situations.

The Data Contamination Issue

How Training Data Affects Benchmark Validity

Data contamination occurs when benchmark test questions, or content closely resembling them, appear in a model's training data. Studies have shown this can inflate model scores by 20 to 80 percent on popular benchmarks. The problem persists for several reasons:

- Major benchmarks are publicly available

- AI models train on vast amounts of internet data

- Ensuring complete separation is technically difficult

- There's strong incentive to achieve high scores
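Contamination audits often look for overlapping word sequences (n-grams) between benchmark questions and training text. The sketch below shows that idea in its simplest form. The 8-word window, the tokenization (lowercased words, punctuation dropped), and the example strings are assumptions chosen for illustration; real audits run over far larger corpora with more careful normalization.

```python
import re


def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Lowercased word n-grams; punctuation is ignored so formatting changes don't hide overlap."""
    tokens = re.findall(r"\w+", text.lower())
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def flag_contaminated(benchmark_items: list[str], training_docs: list[str],
                      n: int = 8) -> list[str]:
    """Return the benchmark items that share at least one n-gram with the training docs."""
    corpus_grams: set[tuple[str, ...]] = set()
    for doc in training_docs:
        corpus_grams |= ngrams(doc, n)
    return [item for item in benchmark_items if ngrams(item, n) & corpus_grams]


# Invented strings for illustration only.
benchmark = [
    "What is the capital of France and why is it famous for art?",
    "Explain how a heat pump moves energy from a cold room to a warm one.",
]
training_docs = [
    "Trivia dump: what is the capital of France and why is it famous for art? Answer: Paris.",
    "A recipe blog post about baking sourdough bread at home.",
]

flagged = flag_contaminated(benchmark, training_docs)
print(f"{len(flagged)}/{len(benchmark)} benchmark items flagged")  # 1/2 benchmark items flagged
```

Exact n-gram matching only catches verbatim or near-verbatim leaks. A paraphrased copy of a test question would slip through, which is one reason complete separation is so hard to guarantee.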

The Incentive Problem in AI Development

The AI industry has created a problematic feedback loop. Companies are judged primarily by their benchmark performance, creating intense pressure to optimize for these metrics specifically. The consequences include:

- Focus on benchmark performance over real-world utility

- Potential sacrifice of general capability for specific scores

- User experiences that don't match marketing claims

- Growing skepticism about AI capabilities

The Competitive Pressure Factor

OpenAI's "Code Red" Response to Google

Reports indicate that OpenAI declared a "code red" as Google's AI advances threatened its market leadership. This competitive pressure appears to have influenced the GPT 5.2 release timeline, and several signs point to a rushed release:

- The model is reportedly an "early checkpoint" rather than the full version

- Significant performance inconsistencies

- The quick timeline between the Gemini 3 and GPT 5.2 releases

- OpenAI's confirmed plans for substantial upgrades in early 2026

The Risk of Becoming the Next BlackBerry

OpenAI faces a genuine competitive threat. History shows that market leaders can be quickly overtaken: Nokia and BlackBerry dominated mobile phones until Apple's iPhone changed everything.

OpenAI doesn't want to become the next BlackBerry or Yahoo, so it is pushing hard to maintain its position. However, rushing releases may ultimately harm its reputation more than a brief period of competitor superiority would.

Real-World Performance vs. Benchmark Genius

The Economic Puzzle of AI Models

Here's a fascinating contradiction in the current AI landscape:

- Models score 100% on AIME 2025

- They beat or match human professionals in roughly 70% of comparisons on GDPval, a benchmark of economically valuable work tasks

- Yet businesses still struggle to extract consistent value from AI

- Benchmark performance suggests genius; profit and loss statements suggest otherwise

The Sample Efficiency Gap

The difference between human and AI learning is stark:

| Learning Aspect | Humans | AI Models |
|---|---|---|
| Learning to drive | 10 hours for any car | Millions of examples, still fails variations |
| Concept application | Learn once, apply everywhere | Need exact pattern thousands of times |
| Format changes | Easily adapt | Often fail completely |
| Novel situations | Use reasoning to navigate | Struggle without training examples |

Why 140 IQ on Paper Doesn't Equal 140 IQ in Practice

If you had a human employee with the intelligence level benchmarks suggest these models possess, they would accomplish far more than current AI systems. The mapping isn't one-to-one—a model that benchmarks at "140 IQ" doesn't provide the same value as a human with that intelligence level.

What OpenAI Has Confirmed About GPT 5.2's Future

The Early Checkpoint Revelation

The Information reported that GPT 5.2 is based on an early checkpoint of the model OpenAI was developing, not the complete version. This supports what many users suspected: the release was premature.

The good news is that "there's still some improvement there," suggesting OpenAI has more capability to unlock in future updates.

Confirmed Upgrades Coming in Q1 2026

In a recent interview, OpenAI leadership confirmed that significant improvements are coming in the first quarter of 2026. When asked about the nature of these improvements, they distinguished between enterprise and consumer priorities:

- For enterprise: more "IQ" improvements (enhanced reasoning and problem-solving) and better performance on complex professional tasks

- For consumers: the main focus is not more IQ; improvements will target what consumers actually want, with different optimization priorities than enterprise features

The Dual-Track Development Approach

OpenAI appears to be pursuing two separate development paths:

- Enterprise optimization: Higher reasoning capability, better benchmark performance, improved professional task handling

- Consumer optimization: Better user experience, improved conversational ability, more consistent behavior

This approach acknowledges that raw intelligence isn't what most users need—they want a model that works reliably and pleasantly for their daily tasks.

How GPT 5.2 Compares to Competitors

GPT 5.2 vs. Major AI Models Comparison

| Feature | GPT 5.2 | Gemini 3 | Claude Opus | GPT 5.1 |
|---|---|---|---|---|
| Simple Bench Ranking | 9th | Higher | Higher | N/A |
| EQ Bench 3 | 3rd | Varies | Varies | N/A |
| Custom Instruction Handling | Inconsistent | Reliable | Reliable | Better |
| User Satisfaction | Mixed | High | High | Higher |
| Professional Tasks | Strong | Strong | Strong | Comparable |
| Conversational Quality | Criticized | Praised | Praised | Preferred |

When to Consider Alternatives

Based on current performance patterns, you might consider alternatives if:

- Custom instructions are critical to your workflow

- You rely heavily on consistent memory features

- Conversational quality matters for your use case

- You need reliable performance on varied tasks

However, GPT 5.2 may still be suitable if:

- Your primary use is specific professional tasks

- You can work around inconsistencies

- You need access to OpenAI's ecosystem

- You're willing to wait for upcoming improvements

Practical Tips for Getting Better Results from GPT 5.2

Optimizing Your Prompts

Given the known issues, here are strategies to improve your GPT 5.2 experience:

- Be explicit: state all requirements directly in each prompt, don't rely on previous context or memory, and repeat important instructions

- Use Thinking mode strategically: it performs notably differently from standard mode and has been described as "at least as good" as previous versions, so reserve it for complex tasks requiring reasoning

- Manage context yourself: include relevant context in each conversation, summarize previous interactions when continuing work, and don't assume the model remembers earlier discussions
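The "state everything, assume nothing" advice can be automated so every request is self-contained. This sketch assumes the role/content message format that most chat-completion APIs accept and deliberately leaves out the actual API call; `build_messages` and its parameters are hypothetical names, not part of any SDK.

```python
def build_messages(standing_instructions: str, session_summary: str,
                   request: str) -> list[dict[str, str]]:
    """Build a self-contained chat request that does not rely on memory.

    The standing instructions travel as a system message on every call, a
    summary of earlier work is restated, and the key instruction is repeated.
    """
    messages = [{"role": "system", "content": standing_instructions.strip()}]

    user_parts = []
    if session_summary.strip():
        # Restate earlier context instead of assuming the model remembers it.
        user_parts.append("Context from earlier sessions:\n" + session_summary.strip())
    user_parts.append("Current task:\n" + request.strip())
    # Repeat the important instruction, per the tips above.
    user_parts.append("Reminder: follow the system instructions exactly.")

    messages.append({"role": "user", "content": "\n\n".join(user_parts)})
    return messages


msgs = build_messages(
    standing_instructions="Write in British English. Use bullet points. Stay under 200 words.",
    session_summary="We are drafting a product FAQ; sections 1-3 are finished.",
    request="Draft section 4, on refunds.",
)
for m in msgs:
    print(f"[{m['role']}]\n{m['content']}\n")
```

Keeping the instructions and the running summary in your own files, rather than in the product's memory feature, means nothing is lost if the model ignores its stored settings.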

Managing Expectations

Understanding the model's limitations helps reduce frustration:

- Accept that benchmark performance doesn't equal everyday performance

- Expect inconsistency across different task types

- Plan for potential failures on novel requests

- Have backup workflows for critical tasks

The Broader Implications for AI Development

The Benchmark Industrial Complex

The AI industry has created what might be called a "benchmark industrial complex"—research teams whose entire purpose is creating training environments to boost specific scores. This focus on metrics over real-world value raises serious questions about the direction of AI development.

What This Means for Users

For everyday users and businesses, the GPT 5.2 situation offers important lessons:

- Don't trust benchmarks blindly: Real-world testing matters more than published scores

- Diversify your AI tools: Having access to multiple models provides flexibility

- Stay informed: Understanding AI limitations helps set realistic expectations

- Provide feedback: User complaints do influence development priorities
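Testing on your own work rather than on published scores can be as simple as a handful of real prompts, each paired with a check you care about, run against every model you have access to. In this sketch the two "models" are hypothetical stubs standing in for real API calls, and the cases are invented examples; swap in your own tasks and client code.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    """One task from your own workflow, plus a pass/fail check on the model's reply."""
    prompt: str
    check: Callable[[str], bool]


def pass_rate(model: Callable[[str], str], cases: list[EvalCase]) -> float:
    """Fraction of cases whose output passes its check."""
    return sum(case.check(model(case.prompt)) for case in cases) / len(cases)


def compare(models: dict[str, Callable[[str], str]],
            cases: list[EvalCase]) -> dict[str, float]:
    """Run the same cases against every model and report each pass rate."""
    return {name: pass_rate(model, cases) for name, model in models.items()}


# Invented example cases; replace them with prompts from your real work.
CASES = [
    EvalCase("Reply with only the word OK.", lambda out: out.strip() == "OK"),
    EvalCase("List three colors as one comma-separated line.",
             lambda out: len(out.split(",")) == 3),
]


# Hypothetical stand-ins for real API calls to two different models.
def stub_verbose(prompt: str) -> str:
    return "Sure! Here is my answer: OK"


def stub_terse(prompt: str) -> str:
    return "OK" if "OK" in prompt else "red, green, blue"


results = compare({"stub_verbose": stub_verbose, "stub_terse": stub_terse}, CASES)
print(results)  # {'stub_verbose': 0.0, 'stub_terse': 1.0}
```

Because the harness only needs a function from prompt to text, the same cases can be rerun whenever a model is updated, so promised improvements can be checked against your own tasks.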

The Path Forward

The AI industry needs to address several fundamental issues:

- Better evaluation methods: Benchmarks that resist gaming and measure true capability

- Transparency: Honest communication about model limitations

- User-focused development: Prioritizing practical utility over impressive numbers

- Sustainable release cycles: Avoiding rushed releases that harm user experience

Conclusion

The GPT 5.2 situation reveals important truths about the current state of AI development. While the model excels on many benchmarks, its real-world performance has disappointed power users who expected improvements over previous versions. The reported early-checkpoint release and the overfitting concerns help explain this disconnect between promises and reality.

For users and businesses, the key takeaway is to evaluate AI tools based on practical testing rather than published benchmarks. The good news is that OpenAI has acknowledged these issues and confirmed substantial improvements are coming in early 2026.

If you're currently experiencing frustration with GPT 5.2, consider exploring the Thinking mode for complex tasks, being more explicit in your prompts, and keeping an eye on competitor offerings. The AI landscape continues to evolve rapidly, and staying informed about these developments will help you make the best decisions for your needs.